At Render, most of the apps we use are web apps. We use Slack for chat, Linear for issue tracking, Slab for knowledge sharing, and GitHub for change management. Render is itself a web app. But there is a notable exception: my development environment. The work I do in dev is saved not to the cloud, but to my local file system. Those bytes never see the inside of a fiber-optic cable, at least not until I push my changes to GitHub. Even if I push frequently, I'm still missing out on the benefits we've come to expect from modern web apps. I can't get work done unless I'm on my own machine, and I can't easily share or duplicate my environment after I've configured it to my liking. And I've spent a lot of time configuring it to my liking. My settings.json file for VS Code is 1026 lines long.

For web developers, hosted dev environments have additional benefits. You can get on-demand access to containers or virtual machines running any version of any operating system, loaded with whatever packages you need, and backed by practically infinite compute and storage. Developing in the cloud narrows the gap between your dev and production environments. This means you can have more confidence in tests that run in dev, and will tend to have fewer unwanted surprises when you deploy to prod. You can share a live preview of your app without needing to use ngrok, and let trusted collaborators take your environment for a spin.

For web development teams, having standardized dev environments can increase velocity and security. It becomes easier to onboard new teammates, maintain dev dependencies, and share productivity improvements. The environment's definition can be version controlled, meaning changes undergo code review.

With these benefits in mind, I endeavored to set up a hosted dev environment for myself. I imposed two constraints:

- It should be hosted on Render. There are a growing number of commercial products in this space, but self-hosting with Render gives me more flexibility and control. I spend a lot of time in my dev environment, so I want the freedom to customize the experience to my liking. Also, I work at Render, and I love using our product.

- It should work with VS Code. 71% of the developers who responded to a recent Stack Overflow survey indicated that they use VS Code, if not exclusively, then at least part of the time. As you probably deduced, I count myself among this majority. (I don't write 1000+ line config files just for fun.) I want my setup to be something that I, and many others, would actually consider using.

What follows is my firsthand account of what it took to set up a hosted dev environment. Read on if you're interested in doing the same, if you like thinking about dev tools, or if you're curious to learn about Render, Tailscale, adding persistent storage to a server instance, running an SSH server in an unprivileged container, or why you shouldn't auto-update VS Code extensions.

If you'd like to see the steps needed to recreate my dev environment on Render, skip to the "recipe" at the end.

Up and Running

I recently heard about an open source project called code-server, which is maintained by the people behind Coder, and lets you access a remote VS Code process from the browser. Deploying code-server to Render feels like a good place to start my exploration. I'm excited to discover an additional repo that helps with deploying code-server to cloud hosting platforms. The repo includes a Dockerfile, so I suspect we can deploy it to Render without any changes.

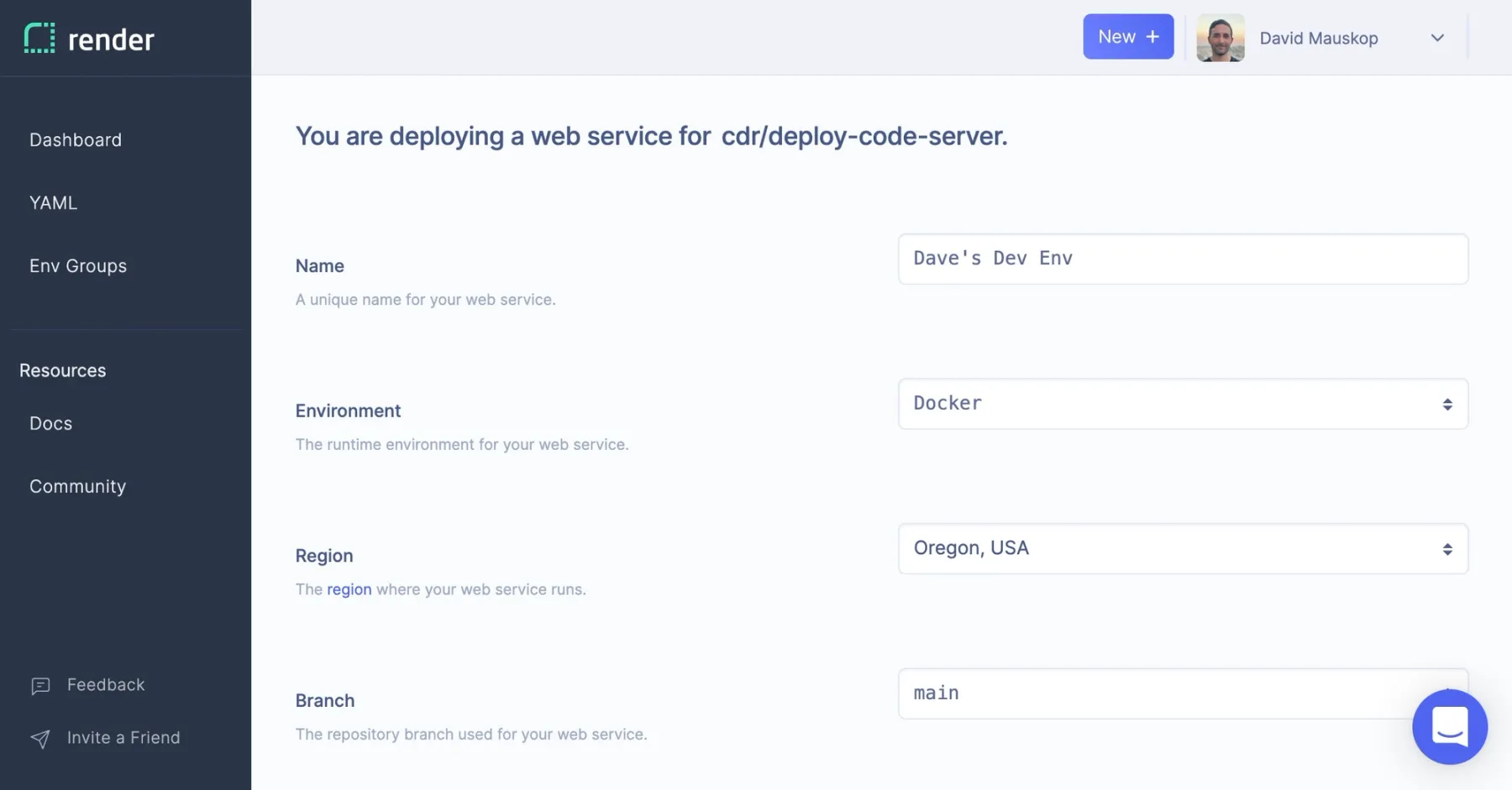

I create a new web service in the Render Dashboard and paste in https://github.com/cdr/deploy-code-server on the repo selection page. I click through to the service creation page and Render auto-suggests Docker as the runtime environment for my service. I name my service Dave's Dev Env and click "Create Web Service". Within a couple of minutes Render has finished building the Docker image and made my code-server instance available at daves-dev-env.onrender.com. Subsequent builds will be even faster since Render caches all intermediate image layers.

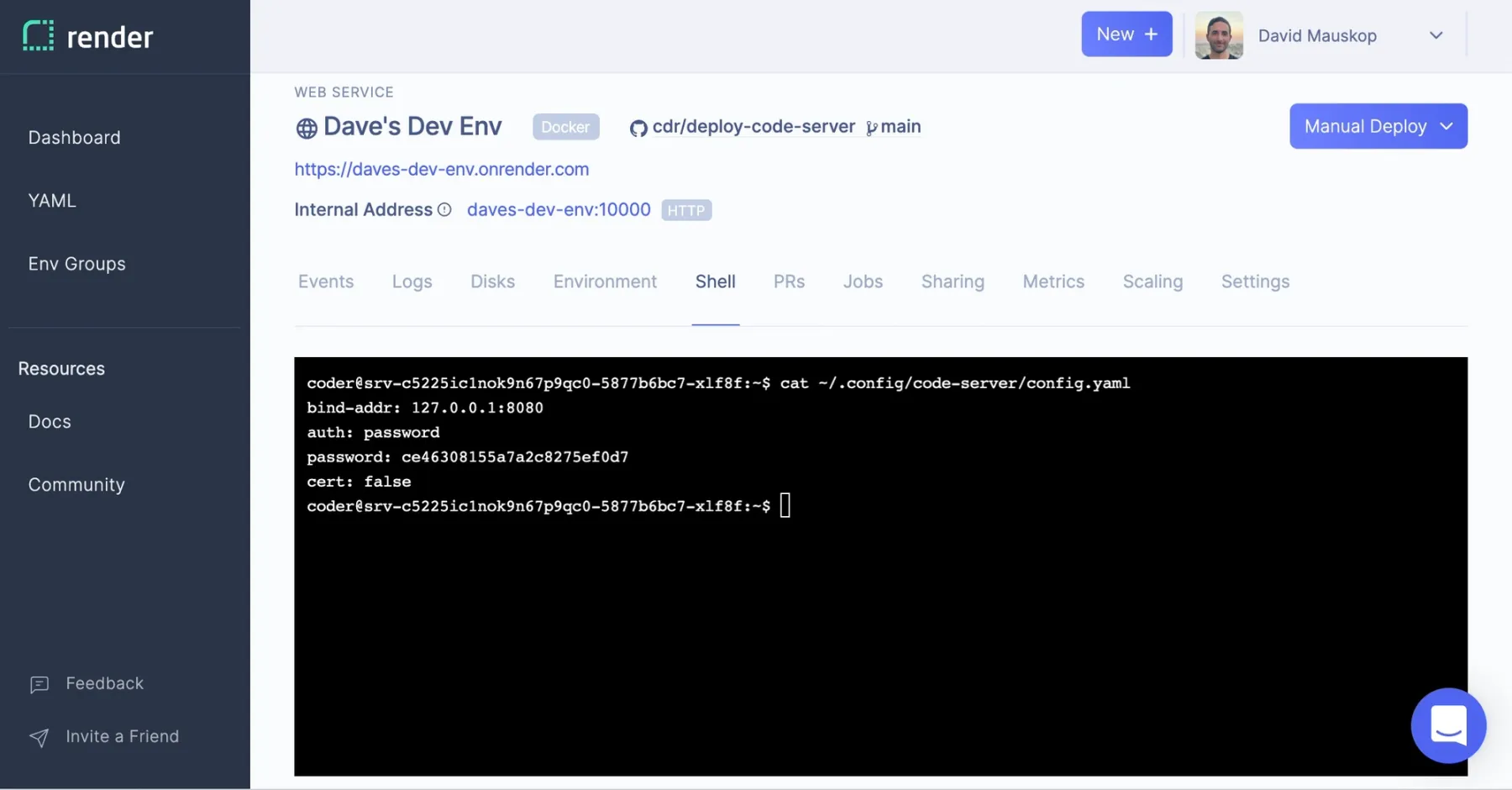

I visit the URL for my web service, and am prompted to enter a password. By default code-server randomly generates a password and stores it as plaintext on the server. This isn't secure, so I'll avoid doing any serious work for now. I have some ideas for how we can make this more secure later.

I switch to Render's web shell and run cat ~/.config/code-server/config.yaml to get my password. Just like that, we're up and running!

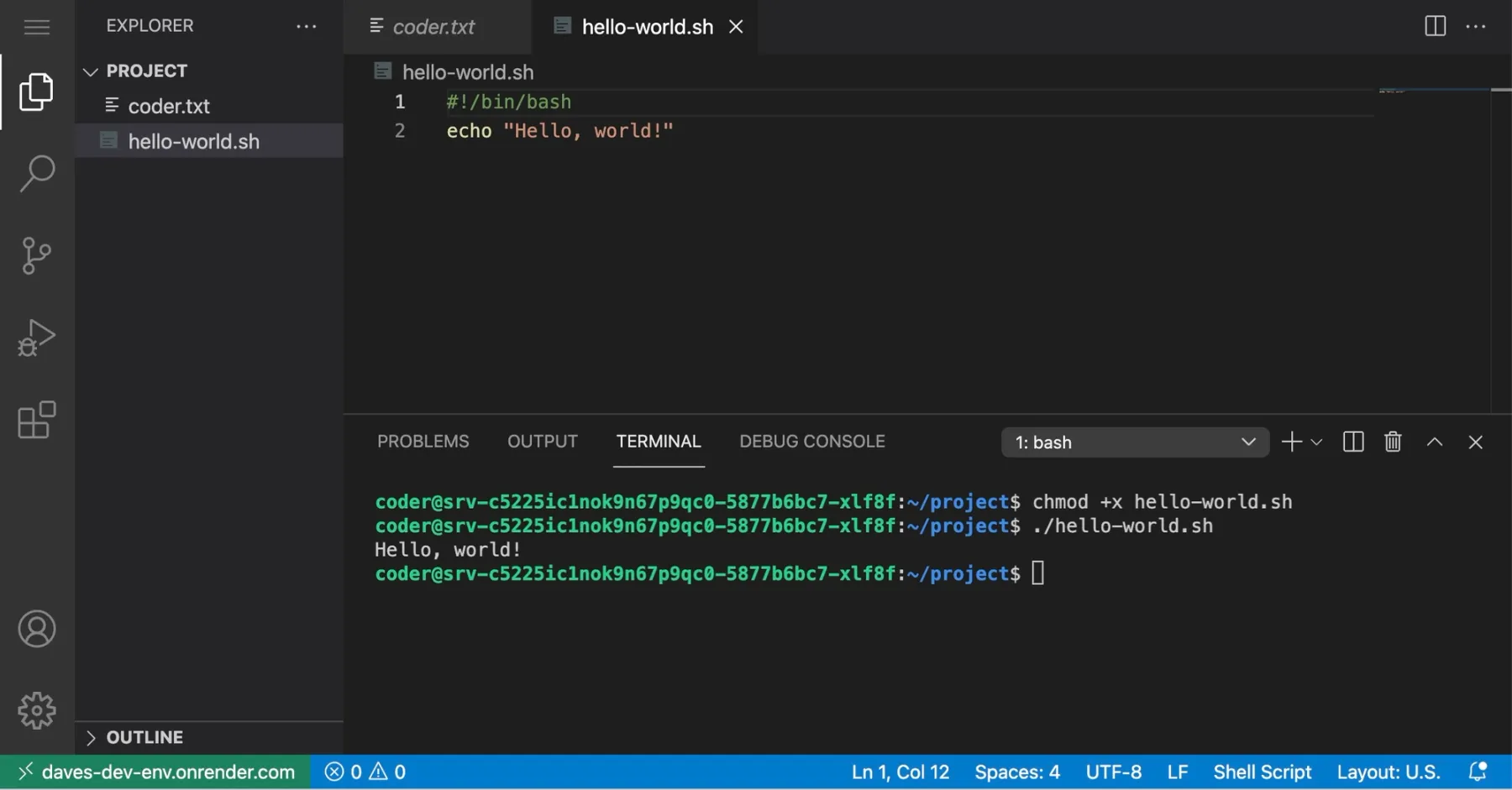

From within the browser, I can write programs and pop open VS Code's integrated terminal to run them in the cloud. To confirm this, I write a "Hello, world" bash script.

I don't notice any lag compared to using VS Code locally. I suspect the text editing and processing (e.g. syntax highlighting) is happening mostly in the browser, and the frontend communicates with the server when it needs to do things like load or save a file, or run a program from the terminal. I refresh the page and am happy to see that my editor state has been saved. I'm back where I left off, editing hello-world.sh. I visit the same URL in another browser tab and start making edits to the file. My changes are synced to the other tab whenever I stop typing, even if I don't save.[1]

It's at this point that I make an exciting discovery in my browser's address bar: code-server is a progressive web app (PWA). I click the "install" icon, and can now use code-server as if it were a native app. Getting rid of the browser frame really does make a difference. Now it feels like I'm developing locally. I add echo "PWAs FTW!" to my bash script.

Securing code-server

I miss my config. I've remapped so many keys that I'm flailing without my settings.json and keybindings.json files. But I don't want to invest more in configuring my code-server instance until I figure out a way to make it secure.

I do what I should've done at the outset and consult the code-server docs. The section on safely exposing code-server recommends port forwarding over SSH. This sounds like a nice option, but Render services run in containers without built-in SSH access. This is a common request among Render users, and we expect to offer it soon. But, alas, it's not here yet, so we need to find a workaround.

We can run an SSH server in the same container as code-server, but Render web services only expose one port to the public internet, and it's assumed to be an HTTP port. That's okay. Why open our server to the internet at all, when we can access it via a VPN?

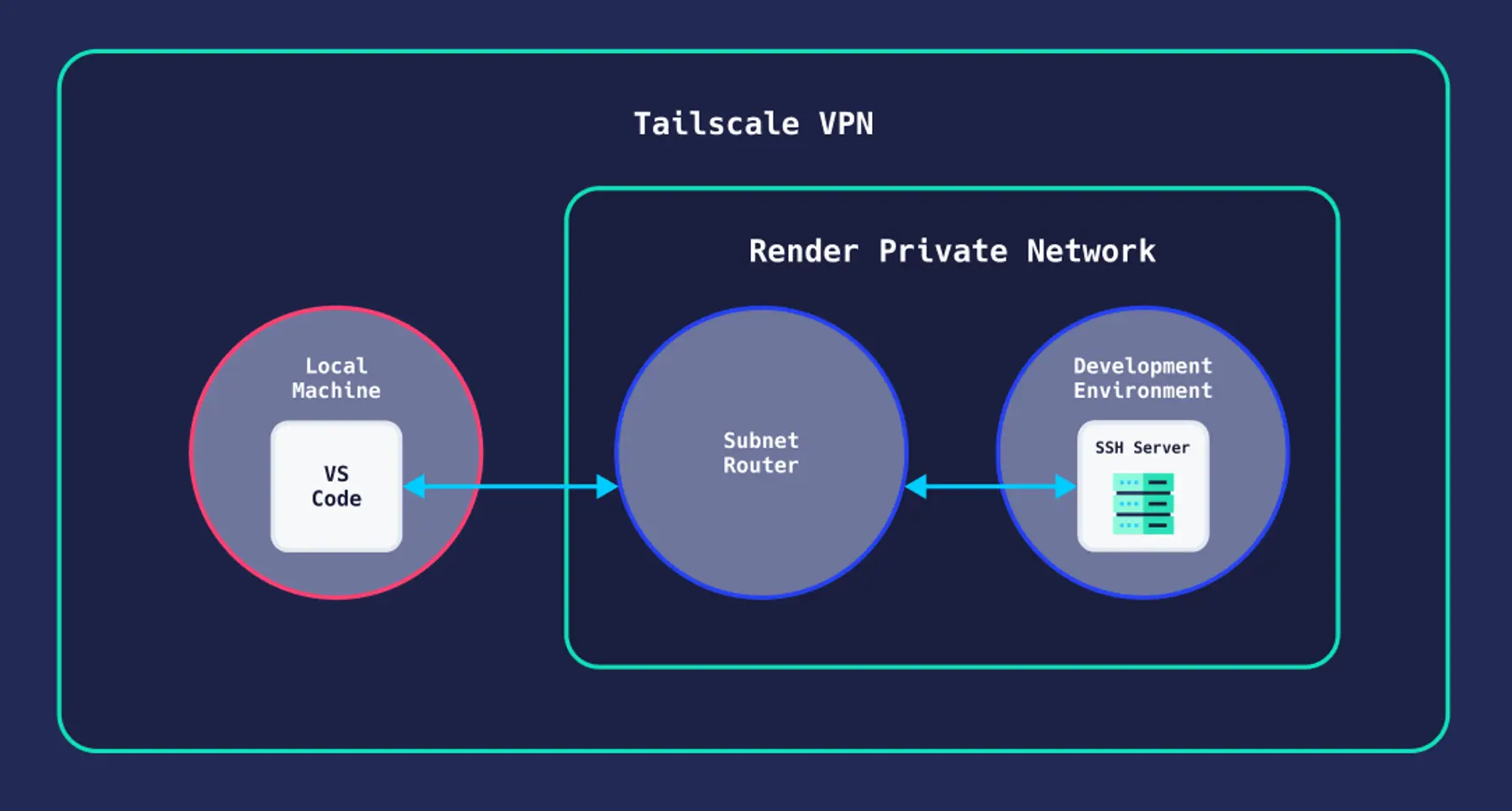

I've been curious about Tailscale for a while, and now a use case for their "zero config VPN" has presented itself. Render's developer advocate, Chris, and founder, Anurag, were recently in touch with the Tailscale team to figure out the best way to use Tailscale on Render. It's possible to run Tailscale in the same container as a Render service, but we found the experience smoother when we deployed a Tailscale subnet router as a standalone Render private service. Either way we need userspace networking mode.

Each Render user and team gets its own isolated private network. By default, the subnet router will make everything in this network part of my Tailscale network. Knowing this, I create a separate Render Team for my dev environment. I sign up for Tailscale, install it on my local machine, and generate a one-off auth key. Then I click the magic "Deploy to Render" button and enter my auth key when prompted. I enable the subnet route in the Tailscale admin panel, and can now access internal IP addresses from my Render private network on my local machine.
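The subnet router's startup can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the contents of the "Deploy to Render" template: it assumes the tailscaled and tailscale binaries are on the PATH, reads the auth key from the TAILSCALE_AUTHKEY environment variable, and uses a hypothetical ADVERTISE_ROUTES variable to override the advertised range.

```shell
#!/bin/sh
# Sketch of a subnet router entrypoint (assumption: not the real template).

# Fail early with a clear message when the auth key is missing.
require_authkey() {
    if [ -z "${TAILSCALE_AUTHKEY:-}" ]; then
        echo "TAILSCALE_AUTHKEY is not set" >&2
        return 1
    fi
}

# Start the daemon in userspace networking mode, then join the Tailscale
# network and advertise the private network's address range as a subnet route.
start_subnet_router() {
    require_authkey || return 1
    tailscaled --tun=userspace-networking &
    sleep 2  # crude wait for the daemon socket; a retry loop would be more robust
    tailscale up \
        --authkey="$TAILSCALE_AUTHKEY" \
        --advertise-routes="${ADVERTISE_ROUTES:-10.0.0.0/8}" || return 1
    wait  # stay in the foreground for as long as tailscaled is running
}
```

Calling start_subnet_router as the container's command would bring the router up. The advertised route still has to be approved in the Tailscale admin panel before traffic flows.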

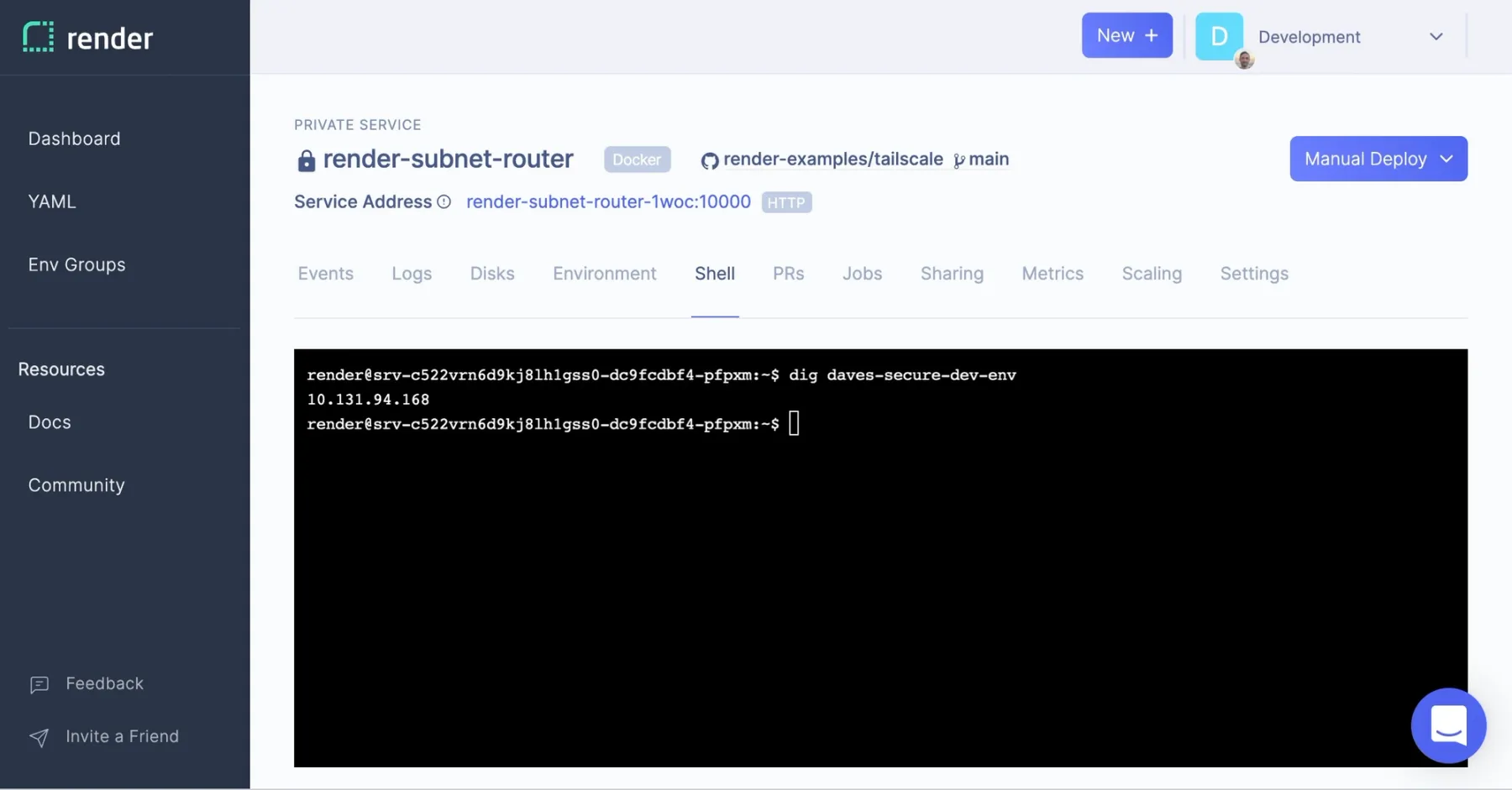

Since I no longer need code-server to run on the public internet, I deploy it as a new Private Service, Dave's Secure Dev Env. Then I go to the shell for my subnet router service and run dig with the internal host name for Dave's Secure Dev Env. This shows me the service's internal IP address. Now I can securely connect to the HTTP port code-server listens on. I didn't even need to set up an SSH server!

My browser doesn't appreciate how secure this setup is. It refuses to register code-server's service worker because we're not using HTTPS. It makes no difference from the browser's perspective that this traffic is being served within a secure VPN. I generate a self-signed certificate, as the code-server docs suggest, pass the certificate and key as arguments to the code-server executable, and instruct my browser to trust the cert. We're back to using HTTPS, and functionality that depends on the service worker is restored.
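Generating the certificate might look something like the sketch below. It assumes OpenSSL 1.1.1 or newer (for the -addext option) and uses a made-up common name; the subject alternative name should match whatever address you type into the browser, which in this setup is the service's internal IP.

```shell
#!/bin/sh
# Create a self-signed certificate and private key in the given directory.
#   $1: output directory
#   $2: subject alternative name, e.g. "IP:10.0.0.5" (hypothetical address)
make_self_signed_cert() {
    cert_dir=$1
    san=$2
    mkdir -p "$cert_dir" &&
    openssl req -x509 -newkey rsa:2048 -nodes -days 365 \
        -subj "/CN=code-server" \
        -addext "subjectAltName=$san" \
        -keyout "$cert_dir/key.pem" \
        -out "$cert_dir/cert.pem"
}
```

After running, for example, make_self_signed_cert "$HOME/certs" "IP:10.0.0.5", the two files can be handed to code-server through its --cert and --cert-key options. The -nodes flag leaves the key unencrypted so the server can start unattended, which is a trade-off worth reconsidering outside of a private network.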

Changing Tack

After taking the time to make it secure, I would really like to like using Dave's Secure Dev Env. Unfortunately there are extensions missing from code-server's reduced set that I've come to rely on. I turn back to the idea of using SSH, but this time with Microsoft's proprietary version of VS Code and its Remote-SSH extension.

I need an SSH server. I try OpenSSH, but run into an issue when I attempt to log in. OpenSSH servers use privilege separation as a security measure. The OpenSSH subprocess that handles authentication runs as an unprivileged user with its root directory set to some safe location. The CAP_SYS_CHROOT capability is required to change the root directory for a process, but Render services run in unprivileged containers without this capability. I discover that there are other SSH servers, like dropbear, that don't do privilege separation. This is great because it's not necessary for my use case anyway. My SSH server will run as a non-root user and will only be available within the VPN.
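One way to confirm that a container lacks this capability is to inspect the effective capability mask the kernel reports for the current process. The sketch below assumes a Linux system with /proc mounted; the bit position 18 for CAP_SYS_CHROOT comes from the kernel's capability numbering, and the function names are my own.

```shell
#!/bin/sh
# Bit position of CAP_SYS_CHROOT in the Linux capability mask.
CAP_SYS_CHROOT_BIT=18

# Succeeds if bit $2 is set in the hexadecimal capability mask $1.
has_cap() {
    [ $(( (0x$1 >> $2) & 1 )) -eq 1 ]
}

# Print the effective capability mask of the calling process (Linux only).
effective_caps() {
    awk '/^CapEff:/ { print $2 }' /proc/self/status
}

# Report whether chroot(2) is available to this process.
report_chroot_cap() {
    if has_cap "$(effective_caps)" "$CAP_SYS_CHROOT_BIT"; then
        echo "CAP_SYS_CHROOT is available"
    else
        echo "CAP_SYS_CHROOT is missing"
    fi
}
```

Running report_chroot_cap in a service's shell should print the second message if the container is as restricted as described above, which would explain why a privilege-separating server fails at login.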

I create a new repo and add a Dockerfile that starts a dropbear SSH server. After testing the image locally, I deploy it to Render as a Private Service, Dave's Second Secure Dev Env. I want to use key-based authentication, so I insert my public key into the environment as a Secret File named key.pub. Public keys aren't secret, but this is still a nice way to manage config files that I don't want to check into source control.

As before, I go to the web shell for the subnet router to look up the internal IP address with dig daves-second-secure-dev-env. Once I verify that I can connect via SSH, I add an entry to my SSH config file for this host.

The Remote-SSH extension makes a good first impression. It shows me a dropdown with the hosts I've defined in my SSH config file, and then uses scp to install VS Code on the host I select, render-dev-env. I'd be very hesitant to allow this on a production instance, but for dev it's pretty convenient. Many of my extensions can continue to run locally, and with a couple clicks I install those that need to run on the remote server. In a few cases I change the remote.extensionKind setting to force an extension to run as a "workspace" extension, i.e. remotely.

I'm feeling good. I have VS Code running remotely within a secure private network and am connecting to it using a secure protocol. All my extensions and config are there. I can access my environment from any machine with VS Code installed, which isn't quite as good as any machine with a browser installed, but is good enough for me. I still get all the other benefits of remote development. As an added benefit, I can now use any of the many tools that are designed to work with the SSH protocol.

I just need a way to authenticate to GitHub and I'll be ready to do some real work. One reasonable approach would be to inject a password-protected SSH key into the environment as a Secret File. Since we're connecting via SSH, it's also possible to use agent forwarding, and avoid storing the key on the remote filesystem entirely. I like this approach, but need to be careful. The SSH agent may be holding on to multiple keys, some of which I don't need to forward, and, following the principle of least privilege, therefore shouldn't.
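One way to forward only the key that's needed is to start a short-lived agent that holds nothing else. The following is a sketch under assumptions rather than the setup I actually used: the function name is made up, and it assumes the OpenSSH client tools (ssh-agent, ssh-add) are installed locally.

```shell
#!/bin/sh
# Run a command with a dedicated SSH agent that holds a single key.
#   $1:  path to the private key to load
#   $2+: command to run while that agent is active
# The body runs in a subshell so the caller's own agent settings are untouched.
with_single_key_agent() (
    key_file=$1
    shift
    eval "$(ssh-agent -s)" >/dev/null || exit 1
    ssh-add -q "$key_file" && "$@"
    status=$?
    ssh-agent -k >/dev/null   # always stop the temporary agent
    exit "$status"
)
```

For example, with_single_key_agent ~/.ssh/github_key ssh -A render-dev-env (where github_key is a hypothetical file name) would open a session in which the remote host can use that one key and no others.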

Adding Persistence

Render services have ephemeral file systems, with the option to attach persistent disks. Ephemeral storage is often desirable, especially for services that are meant to be horizontally scaled.

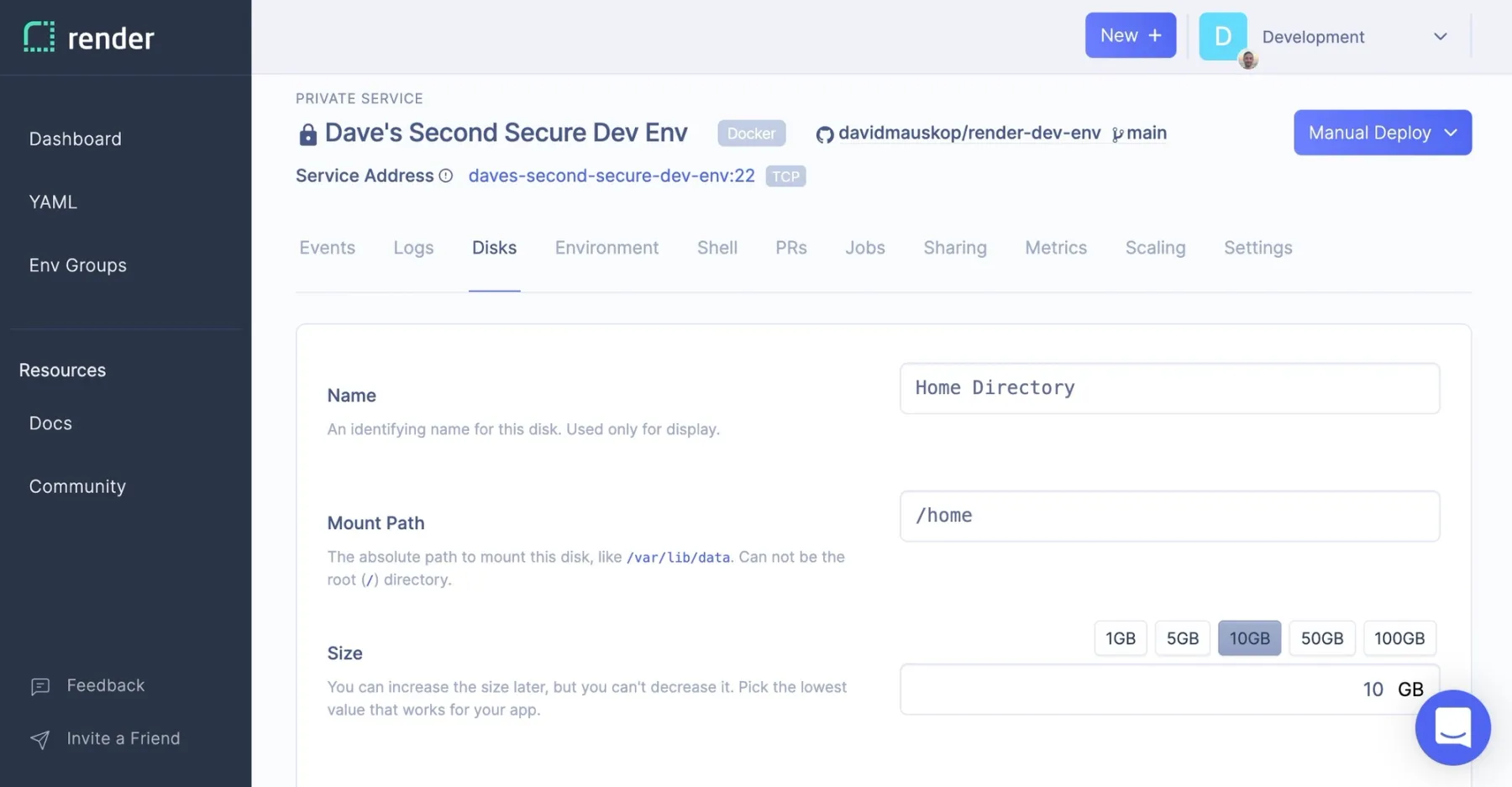

Development environments are different. In dev, I store all kinds of things on the file system, things it would be nice to keep around: changes to git repositories I haven't pushed yet, node_modules packages, various caches, and my bash history, to name a few. I create a Render Disk and mount it at /home:

Now everything in my home directory will be persisted across deploys. This includes the VS Code binary and extensions. I could have added these to the container image, but I don't need to bother now that I have a persistent disk.

There's just one catch: there may be some files under /home, like /home/dev/.ssh/authorized_keys, that are defined as part of the build and deploy process, and should therefore not be persisted.

When Render builds and deploys a service, it performs roughly these steps:

1. Build the service. For Docker services this means building a container image based on the Dockerfile provided.

2. Copy the build artifact into the context where the service will run.

3. If the service has a persistent disk, mount it at the specified path.

4. Start the service.

If we mount a disk at /home (step 3), the mount will hide everything the final built image has in that directory (step 1). We can address this by creating /home/dev/.ssh/authorized_keys not at build time, but at run time (step 4).

I write this start script:

And update the Dockerfile to use it:
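The script and Dockerfile change aren't reproduced here, so what follows is a minimal sketch of what such a start script could look like. Several details are assumptions: that Render exposes the Secret File at /etc/secrets/key.pub, that the SSH user is dev, and the --serve argument, SSH_PORT default, and host key path, which are all hypothetical.

```shell
#!/bin/sh
# start.sh: run-time setup that must happen after the disk is mounted.
SECRET_KEY_FILE=${SECRET_KEY_FILE:-/etc/secrets/key.pub}  # assumed Secret File path
SSH_DIR=${SSH_DIR:-/home/dev/.ssh}
HOST_KEY=${HOST_KEY:-/tmp/dropbear_rsa_host_key}          # hypothetical location

# Copy the public key into place with the permissions SSH servers expect.
install_authorized_keys() {
    src=$1
    dest_dir=$2
    mkdir -p "$dest_dir" && chmod 700 "$dest_dir" &&
    cp "$src" "$dest_dir/authorized_keys" &&
    chmod 600 "$dest_dir/authorized_keys"
}

# Only start the server when asked to, so the helper above can be reused.
if [ "${1:-}" = "--serve" ]; then
    install_authorized_keys "$SECRET_KEY_FILE" "$SSH_DIR" || exit 1
    # Generate a host key on the ephemeral file system if none exists yet.
    [ -f "$HOST_KEY" ] || dropbearkey -t rsa -f "$HOST_KEY" >/dev/null || exit 1
    # -F: stay in foreground, -E: log to stderr, -s: disable password logins.
    exec dropbear -F -E -s -r "$HOST_KEY" -p "${SSH_PORT:-2222}"
fi
```

The Dockerfile would then copy this script into the image and use something like ./start.sh --serve as its command. Because authorized_keys is written on every start, it always reflects the current contents of key.pub rather than whatever was last saved to the disk.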

With this setup we have the flexibility to choose which files should be persisted across deploys, and which should be defined as part of the deploy. More concretely, I can rotate my public key from the Render Dashboard by editing the contents of key.pub.

Customizing the Environment

I need some packages. I resist the urge to install every programming language and utility I've ever used. Hosted dev environments are cheap to spin up and down, so each project can have its own custom-tailored environment. It's defeating the purpose if I throw everything but the kitchen sink into a single environment, and use it for all my projects. This approach might be nice at first, but I'd eventually run into version compatibility issues.

I imagine I'm working on a full-stack JavaScript app that uses a managed PostgreSQL database and a Redis cache, and update my Dockerfile to include these packages:

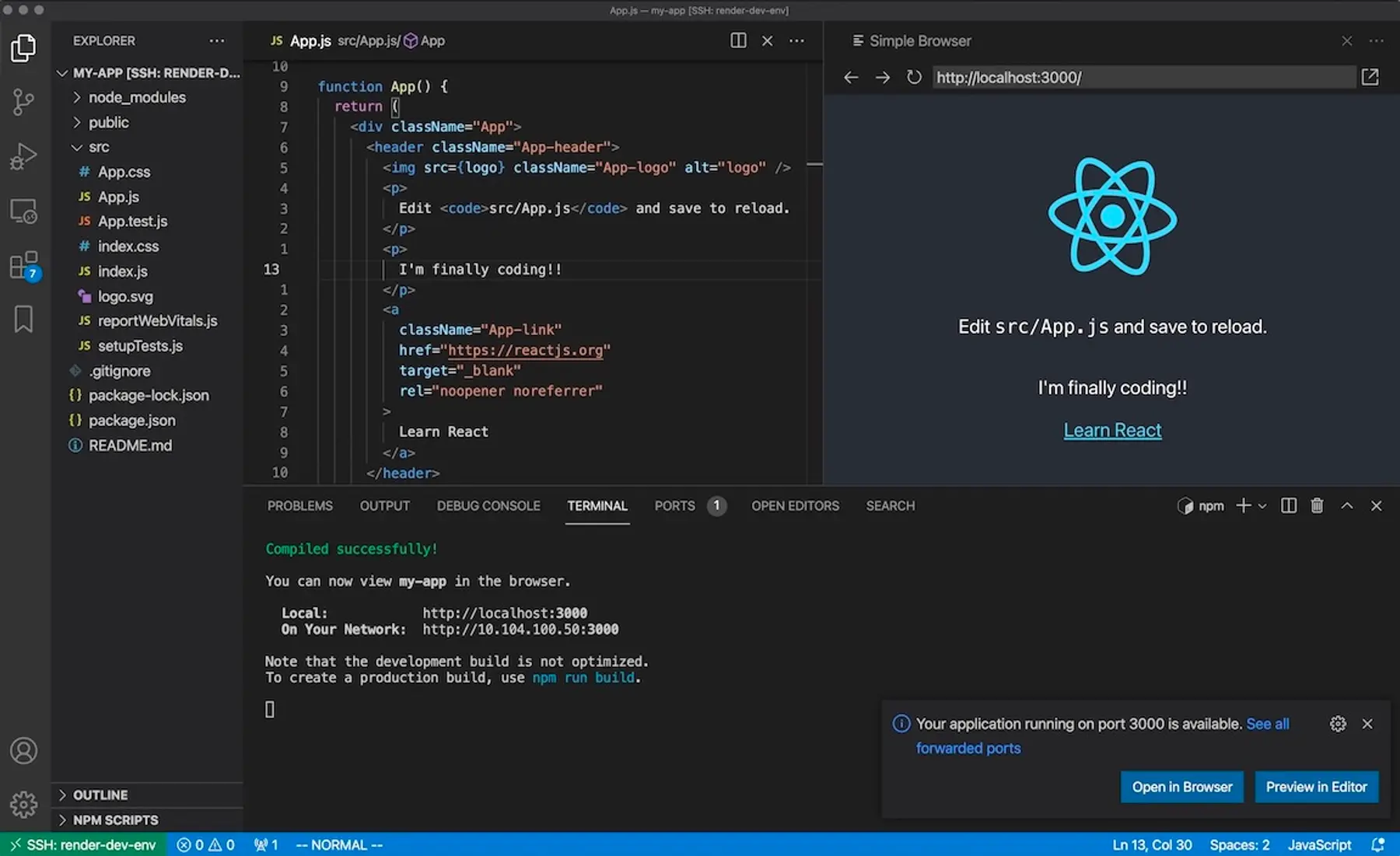
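In shell terms, the package step and a quick sanity check could be sketched as below. The package names assume a Debian or Ubuntu base image and are my guess at a reasonable set (client tools only, since the database and cache are managed); the function names are hypothetical.

```shell
#!/bin/sh
# Debian/Ubuntu package names (assumption: the base image uses apt).
DEV_PACKAGES="git nodejs npm postgresql-client redis-tools"

# Intended to be run as root during the image build, e.g. from a RUN step.
install_dev_packages() {
    apt-get update &&
    apt-get install -y --no-install-recommends $DEV_PACKAGES &&
    rm -rf /var/lib/apt/lists/*
}

# Print the name of every listed tool that is not on the PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}
```

After a deploy, running missing_tools git node npm psql redis-cli from the environment's shell should print nothing if everything was installed as expected.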

With node and git installed, and with SSH agent forwarding in place so I can authenticate to GitHub, I finally start coding.

I run npx create-react-app my-app, create a GitHub repo, and push an initial commit with the React starter code I generated. When I start the development server with npm start, the Remote-SSH extension automatically detects this, sets up port forwarding, and pops open a browser tab. I can also preview the app within VS Code. Hot code reloading works as normal. This feels just like the local experience I'm used to.

My service is running on a starter plan, and already I'm running out of RAM. I change to a larger plan, and after a quick deploy, have access to more memory and CPU. I'm also spending more money, but I can suspend the service at any time and Render will stop charging me that second, until I resume the service. This means there's no additional cost if I want to maintain multiple dev environments, one for each project I have up in the air at any given point in time.

From here, I can customize to my heart's content. As a next step, I might clone my dotfiles repo and install, for example, my .bashrc and .vimrc files. As my project grows in complexity, perhaps to include multiple git repos, I can write a script that clones all my repos and gets everything primed and ready for development. I can create a small managed database for dev, or deploy dev versions of all my microservices as Render services on smaller instance types. This is similar to how we develop Render. We have a script that scales down the dev versions of whatever services are under active development, and starts them running locally.

When my app is ready for production, I can either deploy it using one of Render's native environments (in this case Node) or strip out the dev-specific dependencies from my Dockerfile and deploy my app as a Docker service.

Takeaways

Render is typically used for production, staging, and preview environments. It's a viable option for dev environments, though not without some points of friction. Some of these will be smoothed out when we start offering built-in SSH, others as VS Code's Remote-SSH extension approaches v1.0. There are other editors with more mature SSH integrations. Spacemacs comes with built-in functionality that lets you edit remote files just as easily as you'd edit local files.

There are always security risks when you put sensitive information in the cloud, but hosted dev environments actually have some security benefits. Defining your dev environment in code that can be reviewed and audited will tend to improve your security posture. Shine a light on your config.

Beyond that, hosted dev environments have better isolation. Take a look at the ~/.config directory on your local machine, and you may be surprised at all the credentials that various CLI tools have quietly stuffed in there. This is especially problematic when you consider how often modern developers run untrusted code that can both read their local file systems and make network requests, whether it be an npm package or an auto-updating VS Code extension.[2] We can construct our hosted dev environment so that it doesn't store any credentials on the file system. In these single-purpose environments it may also be more feasible to set up restrictive firewalls that make it harder for malicious code to "phone home" with sensitive data. Keep in mind that creative exfiltration methods will always be possible. For example, if your firewall allows outbound access to GitHub or the public npm registry, an attacker could send themselves information by generating public activity. Still, a firewall for outbound connections is a useful defense that could end up saving your neck.
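For a rough sense of what's sitting in ~/.config, a search like the one below can help. It's a crude sketch: the keyword list is my own guess at common credential field names, so expect both false positives and misses.

```shell
#!/bin/sh
# List files under a directory (default: ~/.config) whose contents mention
# credential-like keywords. The keyword list is illustrative, not exhaustive.
find_credential_files() {
    dir=${1:-$HOME/.config}
    grep -rilE 'token|secret|password|api[_-]?key' "$dir" 2>/dev/null
}
```

Running find_credential_files with no arguments prints the matching file paths, which makes a reasonable starting list of things any untrusted code on the machine could read.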

Am I ready to move all my development work into the cloud? Not quite. There's still too much friction for me, though I expect the situation will continue to improve. I'm glad to have remote development as a tool I can use in certain situations, like if I'm working on a project that needs to run in a very specific environment. The model we saw here—a GUI that runs in the browser or as a local app, connected to a remote service—does work very well for more specific development tasks, like querying a database or managing infrastructure. I'm excited to see new functionality emerge as the web app-ification of software development continues. I can imagine new kinds of collaboration, simplified testing and development flows, and more user-friendly observability tools. I know there are more possibilities that I can't yet imagine.

I hope that answers some questions about remote development, and opens up some new questions. Like, now that I have this dev environment, what the heck do I build?

Steps to Reproduce

This setup has some rough edges and is not ready for serious use. For example, our SSH server's host keys change every time we deploy.[3] With that caveat, if you'd like to try this out for yourself, here are the steps to take. Steps 2, 3, and 5 won't be necessary after we release built-in SSH.

Let me know if you find ways to make this setup more reliable or configure a totally different dev environment on Render. I'll link to my favorites from this post.

1. Create a new Render team for your development work.

2. Create a Tailscale account, and install Tailscale on your local machine. I sometimes had to disconnect and reconnect Tailscale after waking my computer from sleep. There's an open issue tracking this.

3. One-click deploy a Tailscale subnet router into your new team's private network. Generate a one-off auth key from the Tailscale admin page and enter it as the value for the TAILSCALE_AUTHKEY environment variable in the Render Dashboard when prompted. Once the service is live, go to your list of Tailscale machines and approve the 10.0.0.0/8 subnet route. You may want to narrow this down to a more specific route after step 5.

4. Create your own repository using my Render dev environment template and one-click deploy it to Render. When prompted, enter the public SSH key for the key pair you want to use to access your environment.

5. Once the service is live, go to the web shell for your subnet router service and run dig with the host name of your dev environment service as the only argument. Copy the internal IP address that's returned.

6. Add an entry to your SSH config file (normally located at ~/.ssh/config), substituting in the 10.x.x.x IP address you copied in the previous step. You may want to add the location of your private key to the config file with, for example, IdentityFile ~/.ssh/id_rsa in the indented block. If you'd like to always use SSH agent forwarding, add ForwardAgent yes. It's safer to use agent forwarding only when you absolutely need it. From the terminal, you can run ssh -o ForwardAgent=yes render-dev-env.

7. Connect to the environment from a terminal, or from your preferred editor. For VS Code, install the Remote-SSH extension, run the Remote-SSH: Connect to Host command, and choose render-dev-env from the dropdown menu. After VS Code installs itself, you're good to go.
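The SSH config entry from step 6 can be sketched with a small helper. This is illustrative only: the dev user name is an assumption based on the /home/dev path used earlier, the port is left out unless you pass one because it depends on how the SSH server in your image is configured, and the function name is hypothetical.

```shell
#!/bin/sh
# Print an SSH config entry for the dev environment.
#   $1: internal IP address returned by dig (e.g. a 10.x.x.x address)
#   $2: remote user (assumed default: dev)
#   $3: optional port, emitted only when provided
ssh_config_entry() {
    ip=$1
    user=${2:-dev}
    port=${3:-}
    printf 'Host render-dev-env\n'
    printf '    HostName %s\n' "$ip"
    printf '    User %s\n' "$user"
    if [ -n "$port" ]; then
        printf '    Port %s\n' "$port"
    fi
}
```

Appending the output to your config, for example with ssh_config_entry "$IP" >> ~/.ssh/config where IP holds the address from step 5, gives you the render-dev-env host alias used in step 7. Lines such as IdentityFile or ForwardAgent can then be added by hand inside the same indented block.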

Happy coding!

Footnotes

1. I was also curious to see how code-server resolves conflicts, so I turned off WiFi and made conflicting edits to hello-world.sh in the two tabs. In one tab I added a comment about local development, and in the other a comment about remote development. I turned WiFi back on and saw that the remote development comment replaced the local development one. (I guess that settles the debate.) code-server is probably using a last-write-wins policy to keep conflict resolution simple. ↩

2. There's an open issue that proposes a better security model for VS Code extensions. ↩

3. We could address this by storing the host keys as Render Environment Variables or Secret Files. ↩