ReadMe provides powerful, feature-rich API documentation for 5000+ companies (including Akamai, Gusto, Scale AI, and Render) with a lean team that includes just 20 engineers. Of those 20, only one engineer—Ryan Park—focuses entirely on ReadMe’s underlying infrastructure. Ryan joined ReadMe three years ago, after roles in infrastructure at places like Pinterest, Slack, and Stripe.

Ryan recently led the migration of ReadMe’s infrastructure from Heroku to Render. We chatted with Ryan to learn more about the team's migration process: from their initial goals, through tactical steps that included taking inventory of their services and load testing a proof of concept, all the way to their final cutover.

Let’s dive in!

ReadMe’s whimsical, human-centered engineering culture

Render: As a quick intro, tell us a bit about yourself and ReadMe. What might we find surprising or unique?

Ryan: One thing people may not know about me, as an infrastructure engineer, is my background is in Human Computer Interaction (HCI). I always think of infra problems as user experience problems too. How do we provide friendly and easy-to-understand tooling?

My point of view on engineering fits well into the culture at ReadMe. One of our values is “Always Do What’s Human.” One way this shows up is in our decision to rely on managed service providers like MongoDB Atlas and Render. This means our infrastructure—and our engineers—can rely on a first line of defense to keep infrastructure running. Our on-call has been very lightweight compared to the industry.

Another ReadMe value is to “Err on the Side of Whimsy.” In practice, we bring a surprising amount of creativity to what we do. Day to day, we see it in everything from our office space to our offsites. At an offsite last fall the entire team made music videos—with our CEO and COO leading the charge!

Why migrate from Heroku

Render: Why did you decide to move off of Heroku?

Ryan: ReadMe had been running on Heroku from the beginning—eight years. We decided to look for a new provider last year after Heroku encountered some well-publicized security and downtime incidents. We also wanted to partner with a hosting provider that’s investing more in hosting customers like us. In the last few years, Heroku’s been more focused on expanding their integration with Salesforce.

Ideal platform: easy to learn, innovating, and enterprise-ready

Render: What were you looking for in a new platform? Why did you choose Render?

Ryan: We were looking for a platform with a model similar to Heroku’s: a managed service where our developers could spin up services and maintain them on their own. Most of our team focuses on product engineering, and we wanted the learning curve for them to be as low as possible.

We looked at Amazon’s different managed platforms, but the number of different systems involved just to have a running web service was pretty massive. Developers would have to think about provisioning SSL certificates and load balancers.

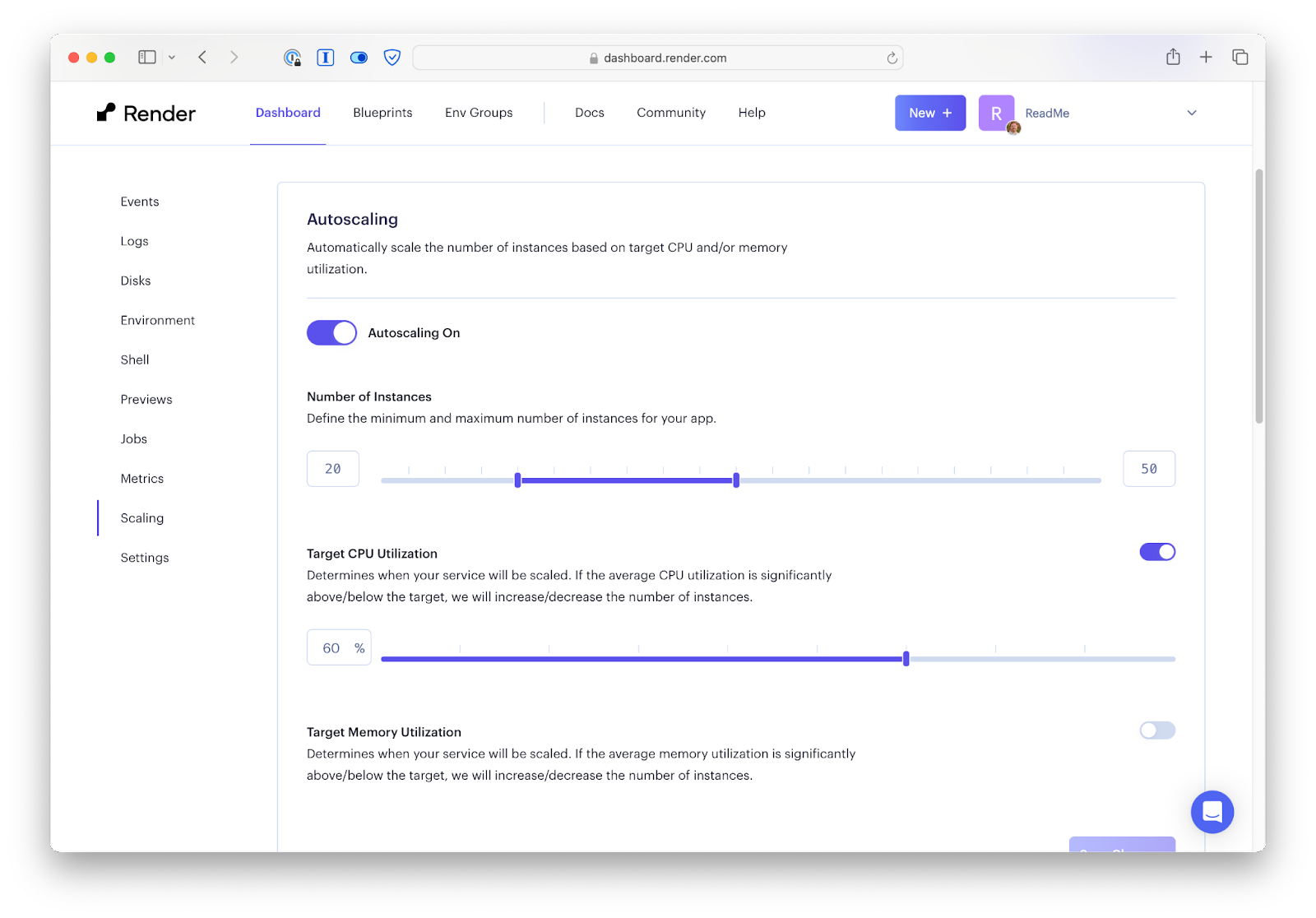

Render abstracts a lot of that. A Render service looks a lot like a Heroku service in that we can spin it up easily and give it a custom domain if we want, or change the scaling settings. We can easily scale up our main monolith web application to the 20 to 40 instances we run. Otherwise, we’re mostly just writing our web app (in our case, it’s a Node.js app). Yes, things can still go wrong, but there are fewer knobs to turn, and fewer things you’re expected to be aware of.

We looked at some other providers. Render seemed to be enterprise-ready and to have the most mature feature set, plus SOC 2 certification. Render also seemed to be moving quickly and adding new capabilities in a way that Heroku hadn’t been for years.

Kicking off the migration: taking inventory

Render: What was the first step you took after you decided to migrate to Render?

Ryan: The first thing we did was take an inventory of everything we had running on Heroku.

During this inventory, we identified services that could require special attention. For example, there were a few services we found we no longer needed, so we decided to shut them down entirely instead of moving them. There’s also a service we’ve kept running on Heroku, because it uses an architecture we don’t want to continue to invest in.

Doing this inventory was really helpful. We saw, for example, that a lot of our services use Redis. We wanted to use Redis in the most Render-native way, so we migrated those instances to Render-managed Redis. On the other hand, most of our data is stored in MongoDB Atlas, and we only had one service that used a PostgreSQL database. This was the service we decided not to migrate, because we would rather replace it.

Proof of concept with the ReadMe monolith

Render: What steps did you take to derisk the migration?

Ryan: After taking the inventory, we got our main monolith application up and running on Render as a proof of concept. This monolith powers everything our users interact with. We expected it to be the most critical and challenging service to migrate.

We got the proof of concept up and running pretty quickly. That helped us find differences between Render and our current setup on Heroku. At this phase, we planned the biggest pieces of the migration.

Here are some items we worked through:

- Region: Our MongoDB Atlas databases were originally hosted in AWS us-east-1. Render doesn’t currently support that region, so we knew we’d need to migrate our databases to a region Render does support (we chose Oregon).

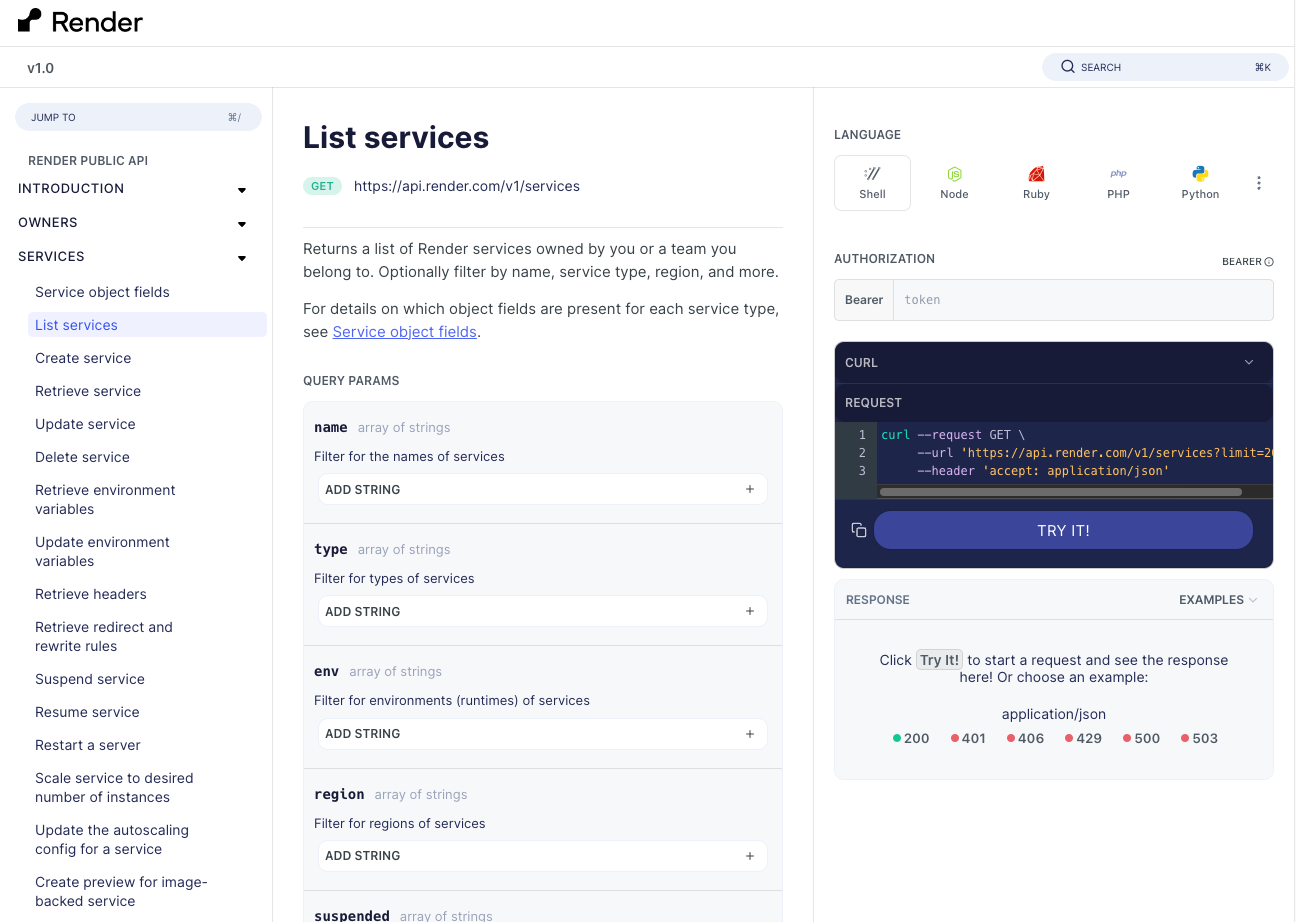

- APIs: There are differences between the Heroku API and Render API. In some cases, our automation relied on APIs that were different enough that we changed a process to be manual. The Render team has since addressed some of these gaps. In general, these cases were not serious blockers, and we’ve had a lot of communication with the Render team.

- Code dependencies on the platform: We knew there might be places in our code that, for example, logged a Heroku dyno name. The proof of concept helped us find code dependencies like this, update them if needed, and ensure they didn’t crash the app.

As a side note, I stressed to the engineering team at ReadMe that Render is different, and the differences would feel like pain points because we were used to how Heroku works. We had to keep in mind that we may feel some frustration in the first few months, and that we’re doing this migration for bigger reasons.

Load testing the proof of concept

Render: Did you validate the performance of the new systems as well?

Ryan: Yes. We load tested the proof of concept monolith.

We used the Grafana k6 load testing service and gave it URLs for a handful of different page types in our system (such as an API reference page and a getting started documentation page). We ran the test against both Render and Heroku and compared the times.

Some page types did appear to perform slower on Render, but the difference was minimal, and it wasn’t enough to stop us from moving forward. First, load testing is a science of its own, and we knew our approach may not yield perfectly accurate results. If we’d had the time, it would have been nice to migrate a portion of the overall traffic, and measure load times from our users’ point of view. We did something simpler. Our old system was in Virginia, and our new system was in Oregon. We chose to run our load tests from somewhere in the middle of the country. We ran the tests from GCP to ensure that they originated from outside where our MongoDB and Render instances were hosted.

Overall, we had greater reasons to do the migration. We also knew we could make up the measured difference through proactive performance improvements.

Migrating lower-risk services first

Render: It sounds like the monolith helped you figure out the hardest problems you would deal with. How did this play into the next step?

Ryan: When it came time to migrate, we started with the least critical services first, followed by the important-but-easy services. We saved the important, harder-to-migrate services for last.

To illustrate:

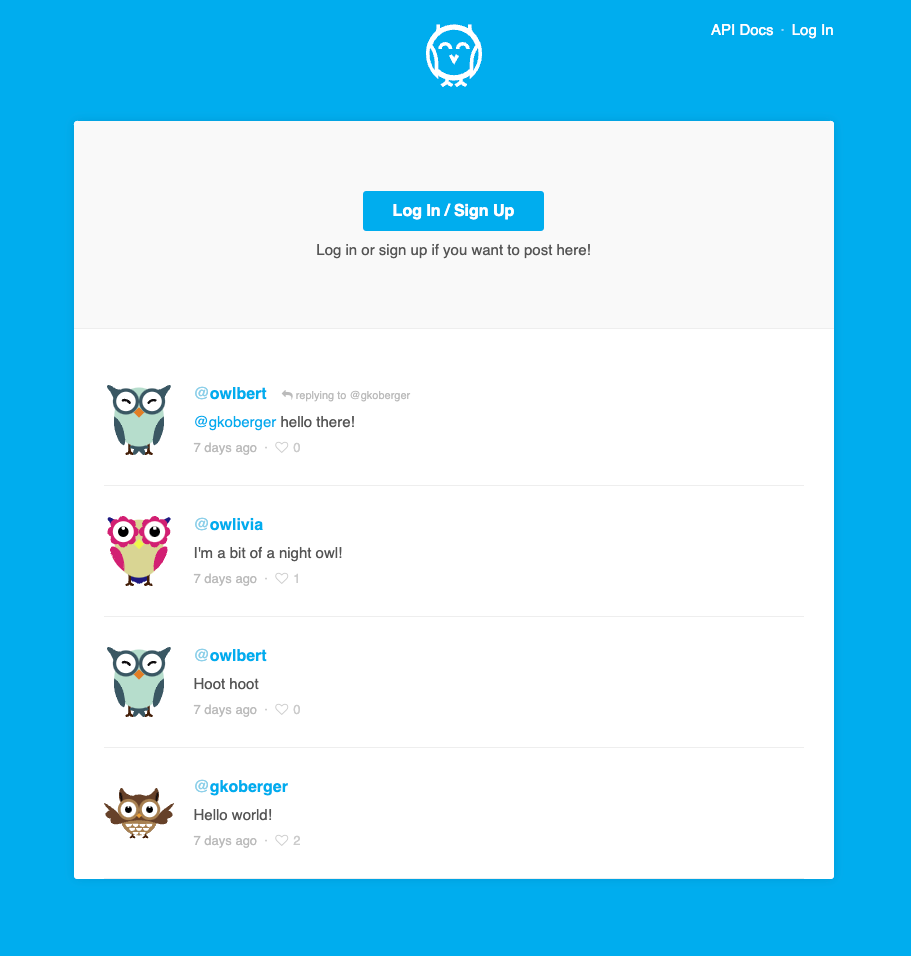

- A less critical service that we migrated early is Hoot, a Twitter clone we built to demo some of our API documentation features. It’s totally unimportant. It has no database, so every time you restart it, it resets all the data. The goal is just to be an API people can interact with through our interactive API explorer.

- A service that was important but easy to migrate is the marketing site for API Mixtape, the ReadMe conference. The conference happened around the same time as our migration, so it was important to keep the site up, but it didn’t have much complexity under the hood.

- The important and difficult services included Deploybert (our internal tool for automating deployments), along with the main monolith.

Migrating the monolith with minimal downtime

Render: How did you tackle the final migration of the monolith?

Ryan: First, I’ll call out that we performed the entire migration process for our dev and QA environments before doing the same for production.

We decided to migrate with a small downtime window. One alternative would have been to do a rolling transition where we’d migrate a small percentage of traffic, then increase that over time. But we weren’t able to do that with our existing infrastructure, and we knew we could keep the downtime short.

The main items we needed to tackle were:

- Moving our Heroku services to Render,

- Configuring the Cloudflare CDN in front of our application to point to Render as the origin server, and

- Moving our MongoDB instances on Atlas to a different region.

For the MongoDB migration, we moved our database instances from the Virginia region to Oregon, to be as close as possible to the Render services to minimize latency between them. This is an interesting piece to talk about.

We originally had three instances of MongoDB running in Virginia and talking to our Heroku services: one primary instance and two read-only followers. We could have switched over traffic by pointing our Render services in Oregon to the MongoDB instances in Virginia. However, this would have introduced too much latency for users.

What we wanted instead, was one point in time where we both switched the DNS to point to our Render service, and switched our MongoDB primary to be an instance in Oregon. This is what we did in our short downtime window.

Before the migration, we stood up three more read-only follower MongoDB instances in Oregon. During the cutover, we stopped writes to the primary instance in Virginia, and set the new primary instance to be one of the Oregon instances. Although we planned an hours-long maintenance window, there was only a 90-second period of hard downtime. This is how long it took for the instance in Oregon to be established as the new primary.

Favorite Render features

Render: Thanks for walking us through those steps! Now that you’ve been on Render for a while, what are some features you especially like?

Ryan: Here are a few that are top of mind:

- Docker support: We like that Docker-image-based workflows are well supported on Render. We specifically build our Docker images elsewhere, and Render supports fetching images from an external registry. Heroku’s Docker support felt secondary in comparison—it wasn’t fully integrated with their API, and there were undocumented features.

- Environment groups: Environment groups in Render may seem like a small detail, but they have been very convenient. They make a difference in our quality of life.

- Blueprints: We use blueprints all the time. Blueprints have been really easy for non-infra engineers to understand. They feel more comfortable making infra changes with Blueprints, compared to CloudFormation or Terraform.

Finally, this isn’t a feature, but I wanted to call it out: Render is a ReadMe customer, and it was nice to work with a company we already had a relationship with. We trusted that Render was invested in us.

Render: Thanks so much Ryan! Where can folks find you if they want to get in touch or learn more about ReadMe?

Ryan: Feel free to reach out to me on LinkedIn if you’d like to know more. ReadMe’s also actively hiring engineers who are excited to join us in shaping the future of API documentation.